
Glossary

Specification

A document that describes the requirements for the software or its functionality.

Error (bug)

A mismatch between the expected result and the actual result.

Acceptance testing

Demonstrating that the implemented software complies with its specification.

Test operation

Full-scale operation of the new software in which all of its functionality is checked.

Basic groups of tests

Dynamic testing

Checking the code while it is running.

Static testing

Analyzing the code without executing it.
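Here is a minimal Python sketch of the difference. It assumes a toy rule ("no division by a literal zero") and two hypothetical functions. The static check only parses the source with the standard `ast` module. The dynamic check executes the code and calls the functions.

```python
import ast

# Hypothetical code under test.
SOURCE = """
def divide(a, b):
    return a / b

def broken():
    return 4 / 0
"""


def static_check(source):
    """Static testing: parse the source and report the line numbers of
    divisions by a literal zero. Nothing is executed."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if (isinstance(node, ast.BinOp)
                and isinstance(node.op, (ast.Div, ast.FloorDiv, ast.Mod))
                and isinstance(node.right, ast.Constant)
                and node.right.value == 0):
            findings.append(node.lineno)
    return sorted(findings)


def dynamic_check(source):
    """Dynamic testing: execute the source and call each function at run time."""
    namespace = {}
    exec(source, namespace)
    calls = {"divide": lambda f: f(8, 2), "broken": lambda f: f()}
    results = {}
    for name, call in calls.items():
        try:
            results[name] = f"ok: {call(namespace[name])}"
        except ZeroDivisionError:
            results[name] = "error: division by zero"
    return results


print(static_check(SOURCE))   # [6]
print(dynamic_check(SOURCE))  # {'divide': 'ok: 4.0', 'broken': 'error: division by zero'}
```

The static check spots the problem in `broken` without running anything, but it knows nothing about run-time values. The dynamic check observes real behavior, but only for the inputs it actually tries.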

Purpose of tests

Code testing

Finding errors and checking that the code's algorithms are implemented correctly.

Interface testing

Checking user input, input modes, and data display. Testing through the interface also exercises the internal code and algorithms, but it cannot provide a full test of them quickly and efficiently.

Types of tests

Modular

Tests are grouped by module; this is so-called unit testing.
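The following is a minimal unit-test sketch that uses Python's standard `unittest` module. The function `add` is a hypothetical unit, and all of its tests are grouped in one test class.

```python
import unittest


def add(a, b):
    """Hypothetical unit under test."""
    return a + b


class AddTests(unittest.TestCase):
    """All tests for one unit live in one test case class."""

    def test_positive_numbers(self):
        self.assertEqual(add(2, 2), 4)

    def test_negative_numbers(self):
        self.assertEqual(add(-1, -1), -2)

    def test_strings_concatenate(self):
        self.assertEqual(add("a", "b"), "ab")


def run_unit_tests():
    """Load and run only this unit's tests; returns a unittest.TestResult."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(AddTests)
    return unittest.TextTestRunner(verbosity=2).run(suite)


if __name__ == "__main__":
    run_unit_tests()
```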

Functional

Tests are grouped by functionality, so the groups depend on the software project. For example:

Working with documents:

* Price list

* Invoices

* Accounts

* Warehouse operations

Working with data transport:

* email

* tcp/ip

* ftp

Working with data storage:

* SQL

  * Oracle

  * Firebird

* file

  * local

  * Network File System

  * Cloud
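One way to keep tests grouped by functionality is sketched below in Python. A small hypothetical `group` decorator files each test under a group name that mirrors the lists above, so one group can be run on its own. pytest offers a comparable mechanism through markers and the `-m` option.

```python
import sqlite3
from collections import defaultdict

# Hypothetical registry: functional group name -> list of test functions.
REGISTRY = defaultdict(list)


def group(name):
    """Decorator that registers a test function under a functional group."""
    def register(func):
        REGISTRY[name].append(func)
        return func
    return register


@group("documents")
def test_invoice_total():
    lines = [(2, 10.0), (1, 5.5)]  # (quantity, price)
    assert sum(qty * price for qty, price in lines) == 25.5


@group("transport")
def test_message_encoding_roundtrip():
    payload = "Invoice #1".encode("utf-8")
    assert payload.decode("utf-8") == "Invoice #1"


@group("data storage")
def test_sql_insert_and_select():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE price_list (item TEXT, price REAL)")
    conn.execute("INSERT INTO price_list VALUES ('apple', 1.5)")
    assert conn.execute("SELECT price FROM price_list").fetchone() == (1.5,)
    conn.close()


def run_group(name):
    """Run every test in one functional group; return (passed, failed) names."""
    passed, failed = [], []
    for test in REGISTRY[name]:
        try:
            test()
            passed.append(test.__name__)
        except AssertionError:
            failed.append(test.__name__)
    return passed, failed


print(run_group("documents"))  # (['test_invoice_total'], [])
```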

Integration

Testing several applications together at the same time.
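The following is a minimal Python sketch of an integration test. It assumes two hypothetical applications that exchange a price list through a CSV file: application A exports the list and application B imports it. The test checks the hand-over between them rather than either application in isolation.

```python
import csv
import os
import tempfile


def export_price_list(path, prices):
    """Hypothetical application A: writes a price list to a CSV file."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["item", "price"])
        for item, price in prices.items():
            writer.writerow([item, f"{price:.2f}"])


def import_price_list(path):
    """Hypothetical application B: reads the CSV file produced by application A."""
    with open(path, newline="", encoding="utf-8") as f:
        return {row["item"]: float(row["price"]) for row in csv.DictReader(f)}


def test_price_list_exchange():
    """Integration test: data exported by A must arrive intact in B."""
    prices = {"apple": 1.5, "pear": 2.0}
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "prices.csv")
        export_price_list(path, prices)
        assert import_price_list(path) == prices


test_price_list_exchange()
print("integration test passed")
```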

Classification by when tests are written

Early testing

Tests are planned in advance and written before the code. The code is then written to the specification that these tests capture.
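The following is a minimal test-first sketch in Python. The specification ("the invoice total is the sum of quantity times price; a negative quantity is an error") and the function name `invoice_total` are hypothetical. The point is that the test is written from the specification before the implementation exists.

```python
# Step 1: the test is written first, straight from the specification.
# At this point invoice_total does not exist yet, so the test would fail.
def test_invoice_total():
    assert invoice_total([(2, 10), (3, 5)]) == 35
    try:
        invoice_total([(-1, 10)])
    except ValueError:
        pass  # the specification requires an error here
    else:
        raise AssertionError("a negative quantity must be rejected")


# Step 2: the code is written afterwards, to satisfy the test.
def invoice_total(lines):
    """Sum of quantity * price over (quantity, price) pairs."""
    total = 0
    for quantity, price in lines:
        if quantity < 0:
            raise ValueError("quantity must not be negative")
        total += quantity * price
    return total


test_invoice_total()
print("test passed")
```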

Current testing

Tests are written while the code is being written or immediately afterwards. They are used to test and debug the functionality and consist mainly of data sets for debugging.

Later testing

Covering long-existing functionality with tests. This is used mostly when defects accumulate like a snowball and the company is short of resources (time, money, or people), or when the company introduces a quality control system.

Levels of working with data

Test data

- data prepared for functional tests;

- a clone of the working data, used to find elusive errors or to test new functionality.
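The following is a minimal Python sketch of cloning working data into a separate test store. It uses the standard `sqlite3` module and `Connection.backup` (Python 3.7+). An in-memory database stands in for the working database, and its `stock` table is hypothetical. Tests then modify only the clone.

```python
import sqlite3


def make_working_db():
    """Stand-in for the working database; the schema is hypothetical."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE stock (item TEXT PRIMARY KEY, qty INTEGER)")
    conn.executemany("INSERT INTO stock VALUES (?, ?)", [("apple", 10), ("pear", 4)])
    conn.commit()
    return conn


def clone_for_testing(working):
    """Copy the complete working database into a separate test store."""
    test_db = sqlite3.connect(":memory:")
    working.backup(test_db)
    return test_db


working = make_working_db()
test_db = clone_for_testing(working)

# Tests may change the clone freely...
test_db.execute("UPDATE stock SET qty = qty - 4 WHERE item = 'pear'")
test_db.commit()

# ...while the working data stays untouched.
print(working.execute("SELECT qty FROM stock WHERE item = 'pear'").fetchone())  # (4,)
print(test_db.execute("SELECT qty FROM stock WHERE item = 'pear'").fetchone())  # (0,)
```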

Reference data

Prepared pseudo-working data that is known to be correct. It is used in acceptance testing to show that the implemented software complies with its specification.

Operational data

- used when an error cannot be detected on the test and reference data and the working data cannot be copied to the test store (database);

- used during test operation.

Checking the result

Positive result

If the result of executing the functionality matches the reference, the test is considered passed.

if (2+2=4) then result:=trOk;

Negative result

When the result of execution differs from the reference, it is considered an error.

if (2+2<>5) then result:=trError;
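The same check against a reference value can be sketched in Python. The `Outcome` enum is a hypothetical stand-in for the `trOk`/`trError` result codes used above. Only the comparison logic is being illustrated.

```python
from enum import Enum


class Outcome(Enum):
    """Hypothetical Python counterparts of the trOk / trError result codes."""
    OK = "trOk"
    ERROR = "trError"


def check_against_reference(actual, reference):
    """Positive result if the actual value matches the reference, otherwise an error."""
    return Outcome.OK if actual == reference else Outcome.ERROR


print(check_against_reference(2 + 2, 4))  # Outcome.OK
print(check_against_reference(2 + 2, 5))  # Outcome.ERROR
```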

Expected negative result

A condition under which the functionality must raise an error when executed. If the code under test does raise the error, the test passes.

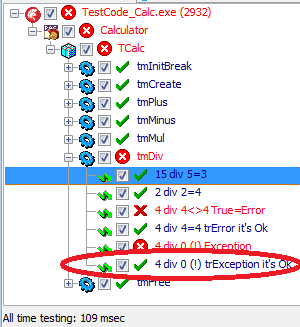

Example: 4/0 is registered with an expected result of 4, but a division-by-zero error must occur. If the code under test raises an exception, the test passes. When registering the parameters with IRegisterMethodParams, you must specify that an exception is expected by passing trException.

// Registration method
IRegisterMethod(tmDiv, 'tmDiv', 'Test Div operation'); // Test methods
// Registration params
IRegisterMethodParams([4,0], [4], '4 div 0 = 4 (!) trException it''s Ok', trException); // Exception = Ok
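The same idea is sketched here in Python. `run_case` and `tm_div` are hypothetical stand-ins, not the TestCode API. Each case carries its arguments, the expected result, and the expected outcome. A case registered with `Outcome.EXCEPTION` passes only if the call raises.

```python
from enum import Enum


class Outcome(Enum):
    """Hypothetical counterparts of trOk / trError / trException."""
    OK = "trOk"
    ERROR = "trError"
    EXCEPTION = "trException"


def tm_div(a, b):
    """Hypothetical method under test: integer division, like Delphi's div."""
    return a // b


def run_case(method, args, expected, expect=Outcome.OK):
    """Run one registered case and return the verdict (Outcome.OK means passed)."""
    try:
        actual = method(*args)
    except Exception:
        # Any exception counts as a pass only when the case expects one.
        return Outcome.OK if expect is Outcome.EXCEPTION else Outcome.ERROR
    if expect is Outcome.EXCEPTION:
        return Outcome.ERROR  # an exception was expected, but none was raised
    return Outcome.OK if actual == expected else Outcome.ERROR


# 4 div 0 with expected result 4: the division raises, which is what the case expects.
print(run_case(tm_div, [4, 0], 4, Outcome.EXCEPTION))  # Outcome.OK
```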

Test execution modes

Manual

All tests are performed manually.

Automatic

Tests are run only programmatically.

Mixed

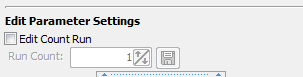

Automated tests with small manual adjustments. For example, in TestCode you can change the number of repetitions while the tests are running.
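The following is a minimal Python sketch of the mixed mode. The run itself is automatic, but an operator can change the number of repetitions by hand. The `TEST_REPEAT` environment variable and the `run_repeated` helper are hypothetical and are not TestCode's mechanism.

```python
import os
import random


def run_repeated(test, repetitions=None):
    """Run `test` several times and return (repetitions, failures).

    If no count is given, it is read from the hypothetical TEST_REPEAT
    environment variable, so an operator can adjust it without editing code.
    """
    if repetitions is None:
        repetitions = int(os.environ.get("TEST_REPEAT", "3"))
    failures = 0
    for _ in range(repetitions):
        try:
            test()
        except AssertionError:
            failures += 1
    return repetitions, failures


def check_addition_is_commutative():
    # Random inputs make each repetition exercise different data.
    a, b = random.randint(-100, 100), random.randint(-100, 100)
    assert a + b == b + a


print(run_repeated(check_addition_is_commutative, 10))  # (10, 0)
```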

Test execution order

Serial

All tests are run in order.

Random

Tests are run in random order. Random execution can take several forms:

- random order within a single:

  - method

  - class

  - unit

  - module

- random order in integration testing.

In turn

This execution order is used in integration testing.
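The following is a minimal Python sketch of serial versus random execution order. The `run_tests` helper and the three invoice tests are hypothetical. The helper uses a seeded `random.Random`, so a random order that exposes a failure can be replayed exactly. The same shuffle can be applied to the tests of a single method, class, or unit.

```python
import random


def run_tests(tests, order="serial", seed=None):
    """Run tests in the chosen order and return their names in execution order."""
    tests = list(tests)
    if order == "random":
        random.Random(seed).shuffle(tests)  # same seed -> same order
    elif order != "serial":
        raise ValueError(f"unknown order: {order!r}")
    executed = []
    for test in tests:
        test()
        executed.append(test.__name__)
    return executed


# Hypothetical placeholder tests.
def create_invoice():
    pass


def post_invoice():
    pass


def print_invoice():
    pass


suite = [create_invoice, post_invoice, print_invoice]
print(run_tests(suite))  # ['create_invoice', 'post_invoice', 'print_invoice']
print(run_tests(suite, order="random", seed=7))  # shuffled; repeatable with seed 7
```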

Performance

Checking compliance with the technical requirements and assessing performance degradation:

- plotting a performance graph;

- finding the limits of effective operation and the critical boundaries;

- calculating the rate at which performance degrades;

- determining the weakest link;

- finding solutions if the load testing results do not meet the requirements.

Speed

Checking how fast data of a reference volume is processed and transmitted.

Load

Speed is tested as the data volume increases.
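The following is a minimal Python sketch of speed and load measurement. A hypothetical operation, `process`, is timed with `time.perf_counter`, first at a reference volume and then at growing volumes. The best of a few runs is kept to reduce noise. The resulting (volume, time) pairs are the raw material for a performance graph.

```python
import time


def process(records):
    """Hypothetical operation under test: sort the records and total them."""
    return sum(sorted(records))


def measure_load(operation, volumes, repeats=3):
    """Time `operation` at each data volume; return (volume, best seconds) pairs."""
    results = []
    for volume in volumes:
        data = list(range(volume, 0, -1))  # synthetic records in reverse order
        best = float("inf")
        for _ in range(repeats):
            start = time.perf_counter()
            operation(data)
            best = min(best, time.perf_counter() - start)
        results.append((volume, best))
    return results


# Speed: one reference volume. Load: the same measurement with growing volumes.
for volume, seconds in measure_load(process, [1_000, 10_000, 100_000]):
    print(f"{volume:>7} records: {seconds * 1000:.3f} ms")
```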

Stress testing

Checking how the software behaves as the number of concurrently running instances increases.
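The following is a minimal Python sketch of a stress test. Threads stand in for concurrently running instances; real instances would usually be separate processes or machines. All threads update one hypothetical shared `Warehouse`. The number of instances is raised step by step, and the final stock is checked for consistency.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class Warehouse:
    """Hypothetical shared resource used by every instance at once."""

    def __init__(self):
        self._lock = threading.Lock()
        self.stock = 0

    def receive(self, qty):
        with self._lock:  # without the lock, concurrent updates could be lost
            self.stock += qty


def stress(instances, operations_per_instance=1000):
    """Run `instances` concurrent workers against one Warehouse; return final stock."""
    warehouse = Warehouse()

    def instance():
        for _ in range(operations_per_instance):
            warehouse.receive(1)

    with ThreadPoolExecutor(max_workers=instances) as pool:
        futures = [pool.submit(instance) for _ in range(instances)]
        for future in futures:
            future.result()  # re-raises any exception from a worker
    return warehouse.stock


# Increase the number of concurrent instances and check the result stays consistent.
for instances in (1, 4, 16):
    stock = stress(instances)
    assert stock == instances * 1000, (instances, stock)
    print(f"{instances:>2} instances: stock {stock}")
```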

Testing development tools and operational environments

Inner part

What we control:

- libraries and third-party components supplied with source code

- our own libraries and components

- modules of the developed software

Outer part

We cannot directly reduce or correct errors here, but we can test for the parameters we need:

- Hardware testing

  - performance

  - support for the instruction sets used

  - operation of the graphics system

  - network equipment

- Software testing

  - the operating system

  - operation of the operating system

  - use of a database

- Environment testing

  - third-party programs installed by the user

  - development tools

    - compiler

    - components and libraries without source code
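For the outer part, the environment parameters can at least be checked automatically before the main tests run. The following is a minimal Python sketch that uses the standard `platform`, `sys`, and `sqlite3` modules. The minimum versions in `REQUIREMENTS` are hypothetical placeholders.

```python
import platform
import sqlite3
import sys

# Hypothetical minimum requirements for the software under test.
REQUIREMENTS = {"python": (3, 8), "sqlite": (3, 7, 0)}


def check_environment(requirements=REQUIREMENTS):
    """Collect environment parameters and list any that fall below the requirements."""
    report = {
        "os": platform.system(),        # e.g. 'Linux', 'Windows', 'Darwin'
        "machine": platform.machine(),  # CPU architecture, e.g. 'x86_64'
        "python": platform.python_version(),
        "sqlite": sqlite3.sqlite_version,
    }
    problems = []
    if sys.version_info[:2] < requirements["python"]:
        problems.append(f"Python {report['python']} is older than required")
    if sqlite3.sqlite_version_info < requirements["sqlite"]:
        problems.append(f"SQLite {report['sqlite']} is older than required")
    return report, problems


report, problems = check_environment()
print(report)
print(problems or "environment OK")
```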